Unity Material Property binding and the Lightmapper

Had an interesting one this week with Unity's lightmapper while trying to bind parameters to complex shaders. This is one of those problems which makes sense in hindsight, but isn't clearly documented.

Treat this more like notes / thoughts than a walkthrough. I also won't be sharing much code here, but will happily answer questions on my socials.

New Terrain

Been working on my first terrain shader recently, building off some existing tech from previous titles in the studio. Technical constraints mean we can't use what's become Unity's de facto third-party terrain solution, MicroSplat/MegaSplat by Jason Booth. However, writing our own gives me the chance to learn, which I always appreciate.

Terrain shaders follow a common theme: they have multiple layers, defined by a splatmap or by vertex data. Each of these terrain layers often needs to function like its own shader with individual parameters, and the shader also needs to blend pleasingly between layers in a performant way.

This often means lots of parameters, which is a pain for artists, as you can end up with very complex or sprawling interfaces. MegaSplat, for example, supports up to 256 layers, so editing and moving this data around also needs some thought.

Our terrain so far has 8 layers, and each layer currently has 8 unique parameters. That means 64 values (plus terrain-wide globals) need to get from somewhere to our material…

The Data

We're building our own interface either way, and what nicer way to store each layer's worth of data in C# than in structs? It's certainly preferable to iterate over 8 layers of data than to unroll it by hand (that's the compiler's job). So why not send a ComputeBuffer down to the shader rather than setting these parameters separately via Material.Set###()?

This works quite neatly and we have a nice LayerBuffer in our shader to loop over. It’s also easy to maintain… I don’t really need to sell the benefits of this kind of programming.

But we're using Shader Graph, which doesn't yet support this kind of looping in nodes, nor our ComputeBuffer. This isn't too problematic, as we can use Custom Function nodes with include files, all handled without drama.

You need to ensure the StructuredBuffer and its struct are declared in an include file that the Shader Graph shader includes.

```hlsl
// Must live in an include file that the Shader Graph shader includes
struct LayerData
{
    float displacement;
    float height;
    float heightAdd;
    float useRgb;
    float glossScalar;
    float4 colorTintR;
    float4 colorTintG;
    float4 colorTintB;
};

// The struct type and the buffer need distinct names
uniform StructuredBuffer<LayerData> LayerBuffer;
```

LayerBuffer[layerId].height can then be read in your custom function, for example.

```csharp
_layerBuffer = new ComputeBuffer(8, (5 * 4) + (16 * 3));
```

For completeness, we'd declare the buffer in C# as above: 8 entries long, with a stride of 5 four-byte floats plus 3 sixteen-byte float4s (68 bytes). We don't need to pass the third argument, as it defaults to ComputeBufferType.Default, which is the StructuredBuffer type we want.
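To sanity-check the (5 * 4) + (16 * 3) stride, here's a minimal C sketch that mirrors the shader's layer struct on the CPU side. It assumes the structured buffer is tightly packed (4-byte floats, no padding between members), which is what that stride implies. C is standing in for the byte arithmetic only; the real CPU-side type would be a C# struct, and LayerData, layer_stride and layer_buffer_bytes are hypothetical names.

```c
#include <assert.h> /* static_assert (C11) */
#include <stddef.h> /* size_t, offsetof */

/* Hypothetical CPU-side mirror of the shader's layer struct.
   float[4] stands in for HLSL's float4: four 4-byte floats, no padding. */
typedef struct {
    float displacement;
    float height;
    float heightAdd;
    float useRgb;
    float glossScalar;
    float colorTintR[4];
    float colorTintG[4];
    float colorTintB[4];
} LayerData;

/* The stride passed to the ComputeBuffer constructor, spelled out the same way:
   5 four-byte floats plus 3 sixteen-byte float4s. */
static size_t layer_stride(void)
{
    return (5 * sizeof(float)) + (3 * 4 * sizeof(float));
}

/* Total buffer size in bytes for `count` layers. */
static size_t layer_buffer_bytes(size_t count)
{
    return count * layer_stride();
}

/* Fails to compile if padding creeps in and the mirror drifts from 68 bytes. */
static_assert(sizeof(LayerData) == 68, "LayerData must match the 68-byte stride");
```

If that static_assert ever fires after adding a parameter, the C# stride and the shader struct need updating together.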

The last wrinkle (I thought) was setting the buffer on the material and keeping it set. Data bound to a material this way is lost in a few scenarios, most notably:

- The shader is recompiled.

- The scene is closed: reloading it, unsurprisingly, means binding these values again.

- Changes to the material are undone. This may well have been down to how I was applying the material during my tests.

For players at runtime these aren't issues beyond initial setup. For edit-time iteration, though, they're frustrations not normally present in Unity's standard material workflow.

A MonoBehaviour with the [ExecuteAlways] attribute is the solution here. In OnEnable() and OnValidate() we can rebind our properties, and in OnDisable() or OnDestroy() perform our teardown as appropriate.

Lightmapping

With the shader working, now we lightmap… right? It seems the lightmapper doesn't pick up additional data bound to the material at runtime, only properties serialized in assets on disk.

In this instance, the shader rendered pure black before I'd bound my ComputeBuffer to the material, and that's exactly what I was seeing in my light probes. Switching the shader to output a constant colour quickly confirmed that the bake itself was working.

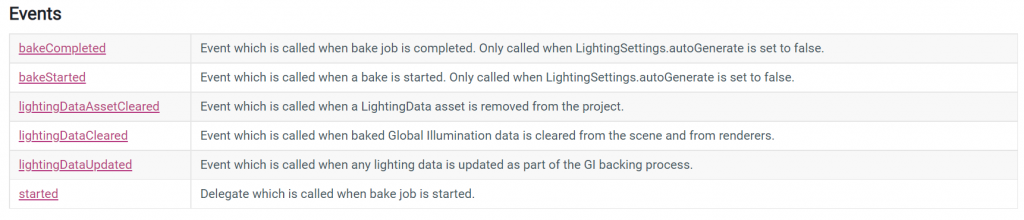

Unity's Lightmapping class has some events to subscribe to.

I'd tried binding this data on bakeStarted and lightingDataUpdated without any success, which is how I came to the conclusion above.

Macro #define

I initially wondered whether ComputeBuffer bindings specifically were the issue. I tried debugging with data in arrays: didn't work. Then with data types which do serialize in the material as properties: still nothing.

Because I'm using Shader Graph, I wasn't going to declare 64 properties, as it's still incredibly slow to update: each property added or connected seems to trigger a regeneration of the shader. I've seen artists at work build graphs which take up to 30 minutes to regenerate, assuming they've not crashed Unity through memory issues first.

This data needs to actually be serialised with the material; just using serialisable types isn't enough. That didn't stop me trying, however. I had a brief look at ways to declare lots of uniforms using macros, and to my surprise something like the following works well.

```hlsl
// Declares the eight per-layer uniforms for one layer index
#define SETUP_LAYER_PROPERTIES(layerId) \
    uniform float _Displacement##layerId; \
    uniform float _Height##layerId; \
    uniform float _HeightAdd##layerId; \
    uniform float _UseRgb##layerId; \
    uniform float _GlossScalar##layerId; \
    uniform float4 _ColorTintR##layerId; \
    uniform float4 _ColorTintG##layerId; \
    uniform float4 _ColorTintB##layerId;

// Expands to _Displacement0 ... _ColorTintB7
SETUP_LAYER_PROPERTIES(0)
SETUP_LAYER_PROPERTIES(1)
SETUP_LAYER_PROPERTIES(2)
SETUP_LAYER_PROPERTIES(3)
SETUP_LAYER_PROPERTIES(4)
SETUP_LAYER_PROPERTIES(5)
SETUP_LAYER_PROPERTIES(6)
SETUP_LAYER_PROPERTIES(7)
```

#define begins the directive, with SETUP_LAYER_PROPERTIES as our macro name, followed by its layerId argument. A backslash at the end of a line continues the macro onto the next line, and ## is the token-pasting (concatenation) operator which joins our property name and layerId, so SETUP_LAYER_PROPERTIES(3) declares _Height3 and friends.

There is a limitation to macros, however. The preprocessor expands them before compilation, so ## can only paste the literal tokens it's given (like 0 to 7), never the value of a runtime variable. You can't loop through these uniforms with an iterator to select a property dynamically. Making these variables loop-friendly still means jumping through some hoops, which mostly means a lot of copy-pasta.

Learnt some new things about macros, but this was a dead end.
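Since HLSL's preprocessor follows C's rules for #define, the trailing backslash and ##, the behaviour can be checked with a plain C compiler. This sketch keeps two of the eight fields, uses ordinary globals in place of uniforms, and drops the leading underscore (names like _Height0 are reserved in C). It shows both halves of the problem: the macro happily generates Height0 to Height7, but reading one by a runtime index needs a hand-written dispatch. LAYER_CASE and layer_total_height are hypothetical helpers, not part of the shader.

```c
#include <assert.h> /* assert() guards the layer index */

/* Same shape as the shader macro, trimmed to two of the eight fields.
   ## pastes the literal layer index onto each name. */
#define SETUP_LAYER_PROPERTIES(layerId) \
    float Height##layerId;              \
    float HeightAdd##layerId;

/* Generates Height0/HeightAdd0 through Height7/HeightAdd7 (zero-initialised globals). */
SETUP_LAYER_PROPERTIES(0)
SETUP_LAYER_PROPERTIES(1)
SETUP_LAYER_PROPERTIES(2)
SETUP_LAYER_PROPERTIES(3)
SETUP_LAYER_PROPERTIES(4)
SETUP_LAYER_PROPERTIES(5)
SETUP_LAYER_PROPERTIES(6)
SETUP_LAYER_PROPERTIES(7)

/* Pasting only sees source tokens: a macro like #define HEIGHT(i) Height##i
   called as HEIGHT(layerId) yields the identifier "HeightlayerId", not Height3.
   So runtime selection needs one hand-written case per layer. */
#define LAYER_CASE(layerId) \
    case layerId: return Height##layerId + HeightAdd##layerId;

/* Height plus heightAdd for a layer picked at runtime. */
static float layer_total_height(int layerId)
{
    assert(layerId >= 0 && layerId < 8); /* only layers 0..7 were generated */
    switch (layerId) {
    LAYER_CASE(0)
    LAYER_CASE(1)
    LAYER_CASE(2)
    LAYER_CASE(3)
    LAYER_CASE(4)
    LAYER_CASE(5)
    LAYER_CASE(6)
    LAYER_CASE(7)
    default:
        return 0.0f; /* only reachable when asserts are compiled out (NDEBUG) */
    }
}
```

Every new parameter means touching both macros, which is exactly the kind of copy-pasta described above.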

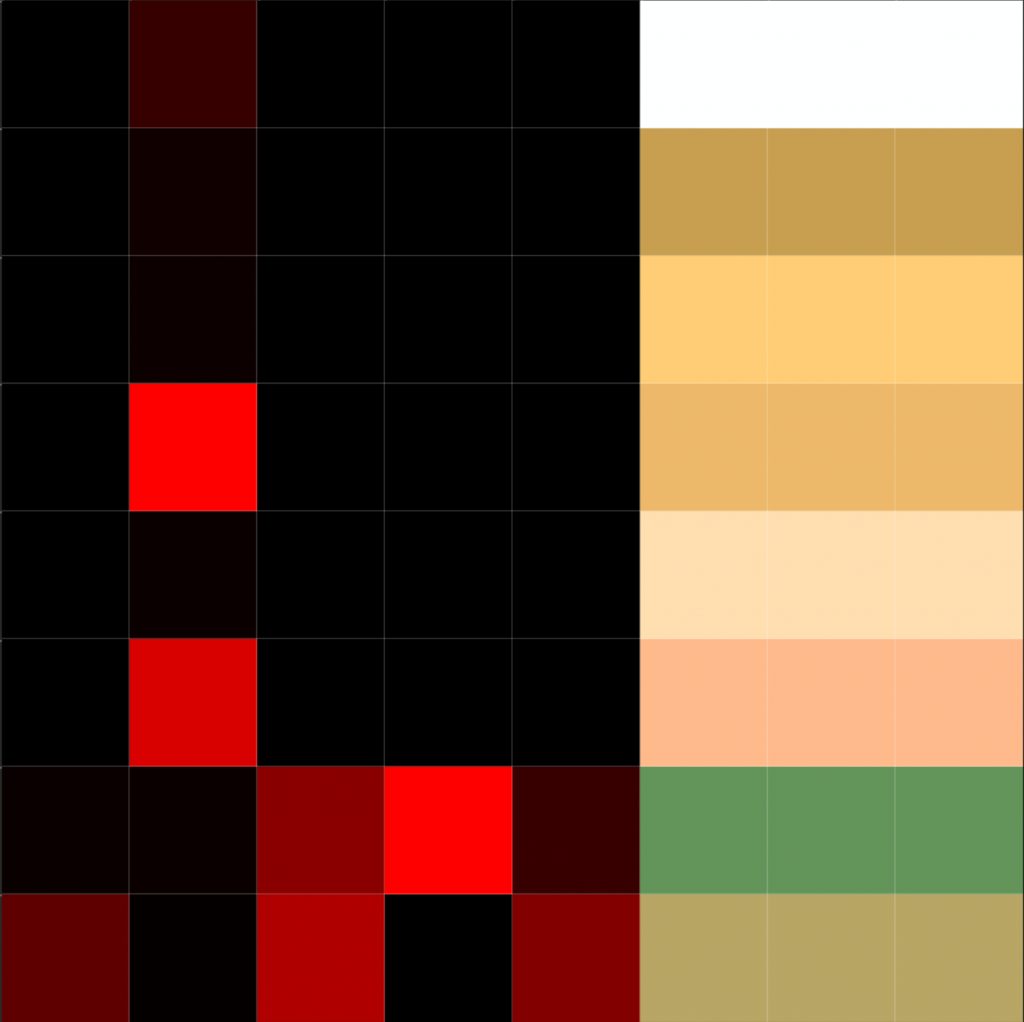

LUT or not to LUT

So, a LUT (look-up texture), as there's no other sane way to do this. Had I not been using Shader Graph, I could have just copy-pasted 64 values into the Properties block for now.

One solution is to move away from Shader Graph, something which I've done before. Despite repeated grumbling from me about it, Shader Graph is still faster and more accessible for me for now. Its main advantage is that graphs auto-upgrade with HDRP version changes, something which was a pain during I Am Fish, and was the issue which ultimately saw me go back to Shader Graph.
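As a rough picture of what such a LUT could hold, here's a C sketch that packs per-layer parameters into an RGBA float texture, one row per layer and one texel per parameter. The layout is an assumption for illustration, not necessarily the one used here: the five scalars sit in the red channel and each colour tint fills a whole texel, which works out to exactly 8 columns. LayerData, pack_layer_lut, write_texel and the column order are hypothetical; in Unity the packed data would end up in a texture asset saved to disk and assigned to the material.

```c
#include <assert.h> /* assert() for argument checks */
#include <stddef.h> /* size_t */
#include <string.h> /* memcpy */

#define LUT_LAYERS 8   /* rows: one per terrain layer */
#define LUT_PARAMS 8   /* columns: one texel per parameter */
#define LUT_CHANNELS 4 /* RGBA float texels */

/* Hypothetical per-layer data; float[4] stands in for a float4 colour. */
typedef struct {
    float displacement, height, heightAdd, useRgb, glossScalar;
    float colorTintR[4], colorTintG[4], colorTintB[4];
} LayerData;

/* Copies one RGBA value into texel (column, row) of a row-major LUT. */
static void write_texel(float *lut, int column, int row, const float rgba[4])
{
    size_t index = ((size_t)row * LUT_PARAMS + (size_t)column) * LUT_CHANNELS;
    memcpy(&lut[index], rgba, LUT_CHANNELS * sizeof(float));
}

/* Packs LUT_LAYERS layers into `lut`, which must hold
   LUT_LAYERS * LUT_PARAMS * LUT_CHANNELS floats. */
static void pack_layer_lut(const LayerData *layers, float *lut)
{
    assert(layers != NULL && lut != NULL);
    for (int row = 0; row < LUT_LAYERS; ++row) {
        const LayerData *layer = &layers[row];
        /* Columns 0..4: scalars in red, green/blue/alpha left at zero. */
        const float scalars[5] = {
            layer->displacement, layer->height, layer->heightAdd,
            layer->useRgb, layer->glossScalar
        };
        for (int column = 0; column < 5; ++column) {
            const float texel[4] = { scalars[column], 0.0f, 0.0f, 0.0f };
            write_texel(lut, column, row, texel);
        }
        /* Columns 5..7: each colour tint fills a whole texel. */
        write_texel(lut, 5, row, layer->colorTintR);
        write_texel(lut, 6, row, layer->colorTintG);
        write_texel(lut, 7, row, layer->colorTintB);
    }
}
```

In this layout height and heightAdd land in columns 1 and 2, and each scalar texel wastes three channels; packing four scalars per texel would shrink the LUT at the cost of messier lookups.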

The issue long-term however isn’t just about the ease of creating a lot of properties, it’s also maintaining them. There’s a good chance we don’t need to exceed 8 layers, but we’ll need more than the 8 parameters we have right now. In addition, the way these values are packed will almost certainly change. Having a boatload of properties is still not a nice solution.

I'd wondered before why Micro/MegaSplat relied so much on LUTs. I'd assumed it was down to something specific in that implementation which made it necessary. But now I know this data won't reach the lightmapper unless it's serialized to disk. I also don't want to see the properties block of a shader with 256 layers of parameters, even if it's dynamically created.

Seems there’s a reason why I don’t see ComputeBuffers in use as much. I still think I’ll be using them for other complex non-lightmapped shaders which benefit from structs of parameters.

I should have just started here, although I'm concerned about the performance of so many samples of this texture. The 2nd and 3rd columns of this texture are sampled for every pixel for the height-blending calculation, so perhaps those values will need to move out of the LUT and be encoded in a friendlier way. But for now, as long as this texture is saved to disk and assigned to our material, the lightmapper picks it up.
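For the samples themselves, each parameter read wants the centre of its texel, so bilinear filtering (if enabled) returns that texel alone instead of blending in a neighbouring parameter or layer. Here's a small C sketch of that coordinate maths, assuming one row per layer and one column per parameter (8 × 8 for the current terrain), so the 2nd and 3rd columns are column indices 1 and 2. lut_uv is a hypothetical helper; in the shader the same expression would build the UV passed to the LUT sample.

```c
#include <assert.h> /* assert() for range checks */

/* A normalised texture coordinate. */
typedef struct {
    float u;
    float v;
} LutUv;

/* Centre of texel (column, row) in a width x height LUT, assuming row 0 sits at v = 0:
   u = (column + 0.5) / width, v = (row + 0.5) / height. */
static LutUv lut_uv(int column, int row, int width, int height)
{
    assert(width > 0 && height > 0);
    assert(column >= 0 && column < width);
    assert(row >= 0 && row < height);
    LutUv uv = { (column + 0.5f) / (float)width, (row + 0.5f) / (float)height };
    return uv;
}
```

Setting the LUT's filter mode to point sampling sidesteps the blending question entirely; the half-texel offset keeps the lookup well-defined under either mode.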

I look forward to being able to share the results 😉